In this post, we briefly discuss several tools that are useful when developing and structuring machine learning projects. Specifically, we focus on the fundamental tools related to MLOps.

Introduction to MLOps

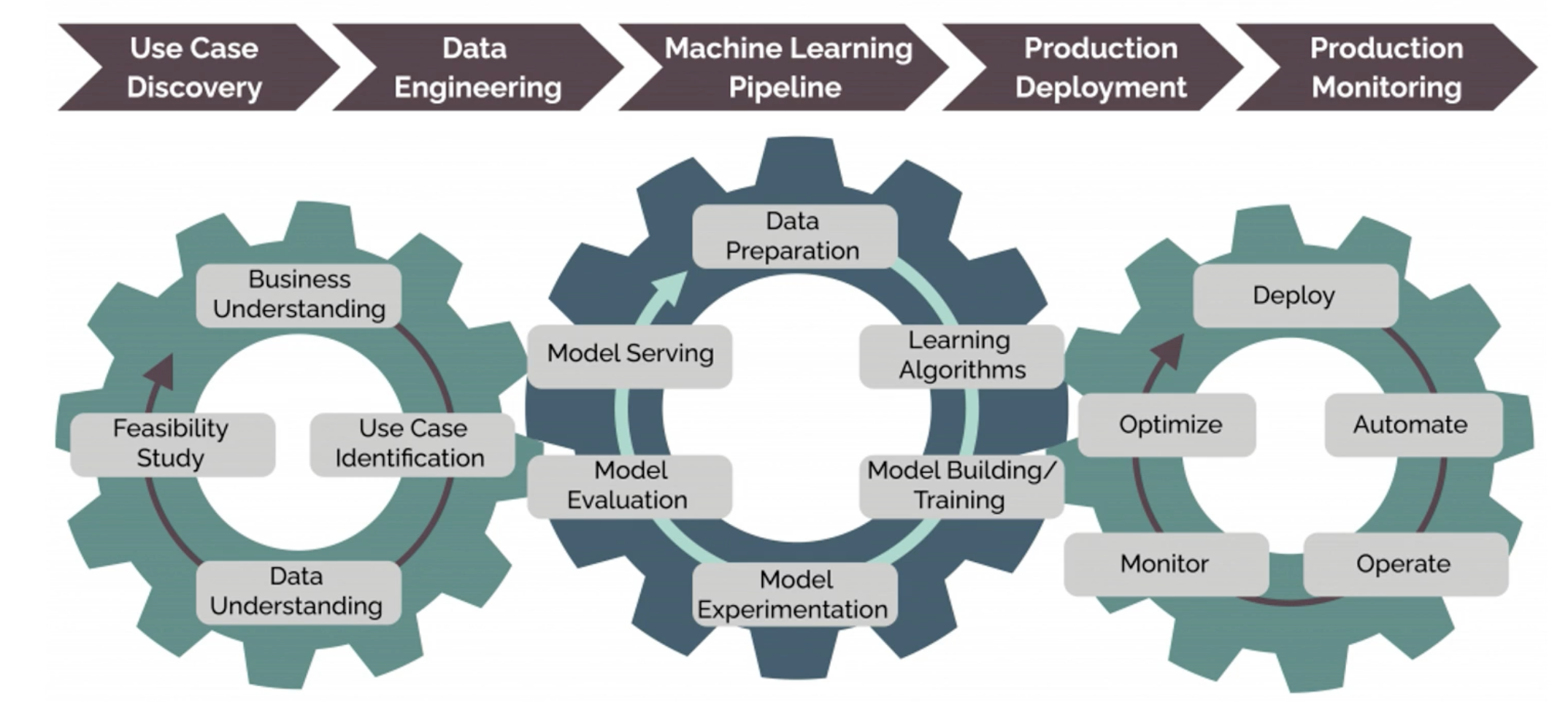

A machine learning model must be scalable, collaborative and reproducible. The principles, tools and techniques that make models scalable, collaborative and reproducible are known as MLOps. Developing a model with MLOps follows these stages:

- Use Case Discovery

- Data Engineering

- Machine Learning Pipeline

- Production Deployment

- Production Monitoring

Cookiecutter

Cookiecutter is a tool for automatically creating a project's folder structure from templates. It generates a static file and folder structure based on the information we provide.

We can install cookiecutter using:

pip install cookiecutter

Once it is installed, we can use the following command to create a project from a data science template:

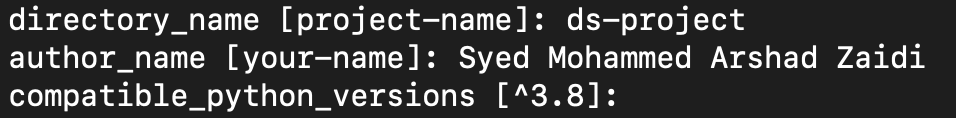

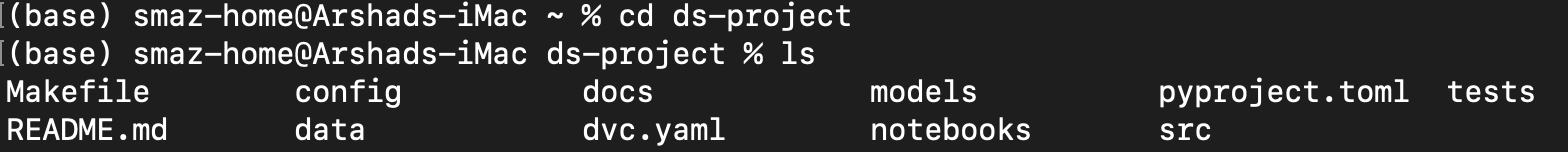

cookiecutter https://github.com/khuyentran1401/data-science-template

We will then be prompted to enter the project information.

Once this information is given, we can navigate to the project directory and check the files that cookiecutter created.
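To make the idea of template-based scaffolding concrete, here is a minimal sketch using only the Python standard library. It is not how cookiecutter is implemented; the `TEMPLATE` mapping, the `render_project` helper and the placeholder names are all hypothetical and only illustrate how answers to prompts can be substituted into paths and file contents.

```python
import tempfile
from pathlib import Path
from string import Template

# Hypothetical template: relative path -> file content, with $placeholders.
TEMPLATE = {
    "$project_name/README.md": "# $project_name\n\nAuthor: $author\n",
    "$project_name/src/__init__.py": "",
    "$project_name/data/raw/.gitkeep": "",
    "$project_name/notebooks/.gitkeep": "",
}


def render_project(template: dict[str, str], context: dict[str, str], root: Path) -> Path:
    """Create the templated files under root and return the project directory."""
    for raw_path, raw_content in template.items():
        # Substitute the user's answers into both the path and the content.
        path = root / Template(raw_path).substitute(context)
        path.parent.mkdir(parents=True, exist_ok=True)
        path.write_text(Template(raw_content).substitute(context), encoding="utf-8")
    return root / context["project_name"]


if __name__ == "__main__":
    answers = {"project_name": "churn-model", "author": "Jane Doe"}  # made-up answers
    with tempfile.TemporaryDirectory() as tmp:
        project_dir = render_project(TEMPLATE, answers, Path(tmp))
        for created in sorted(project_dir.rglob("*")):
            print(created.relative_to(tmp))
```

A real cookiecutter template works on a directory tree with Jinja-style placeholders rather than a Python dictionary, but the principle of filling a fixed structure from a handful of inputs is the same.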

Poetry

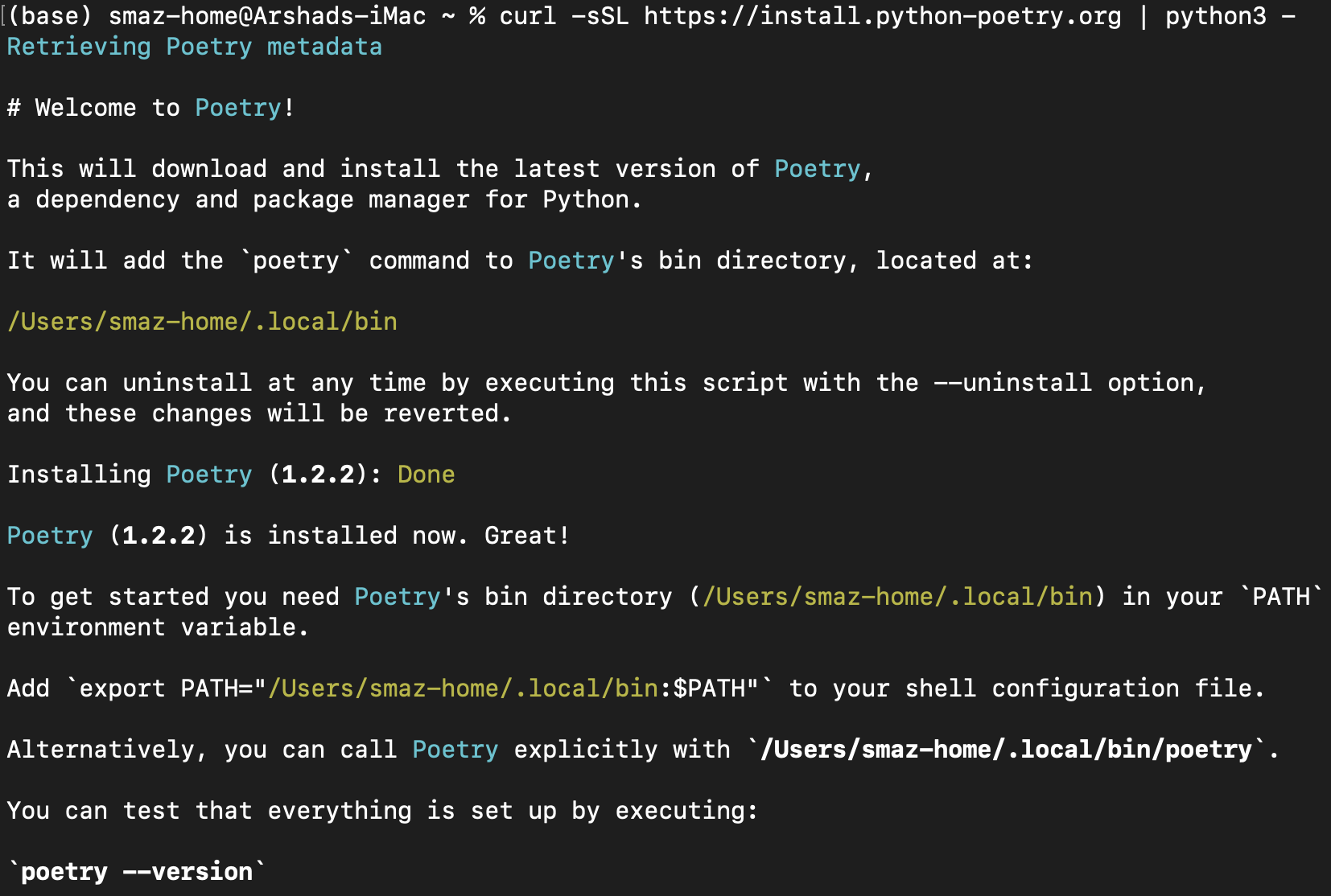

Poetry is a Python tool for managing dependencies and their versions. When we install libraries with pip from a requirements file in a new environment, we often run into problems getting the appropriate version of each dependency.

We can install Poetry using:

curl -sSL https://install.python-poetry.org | python3 -

An alternative to installing libraries with pip is using Poetry. It allows us to:

- Separate main dependencies and sub-dependencies into two files (pyproject.toml and poetry.lock), instead of a single requirements.txt.

- Create readable dependency files.

- Remove all unused sub-dependencies when removing a library.

- Avoid installing new libraries that conflict with existing libraries.

- Package the project with a few lines of code.

All the dependencies of the project are specified in pyproject.toml.

After installing poetry, we can make use of the following commands:

- poetry new <project-name>: Generate a project.

- poetry install: Install dependencies.

- poetry add <library-name>: Add a new PyPI library.

- poetry remove <library-name>: Delete a library.

Makefile

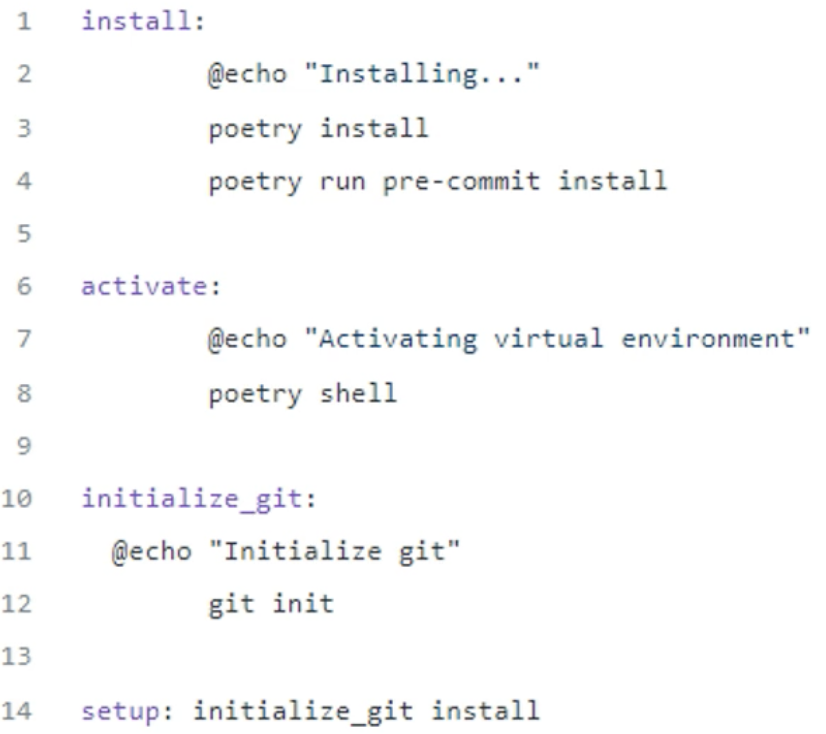

A Makefile creates short and readable commands for configuration tasks. We can use a Makefile to automate tasks such as setting up the environment. Assuming our Makefile defines targets named activate and setup, we execute them using:

make activate

or

make setup

Hydra

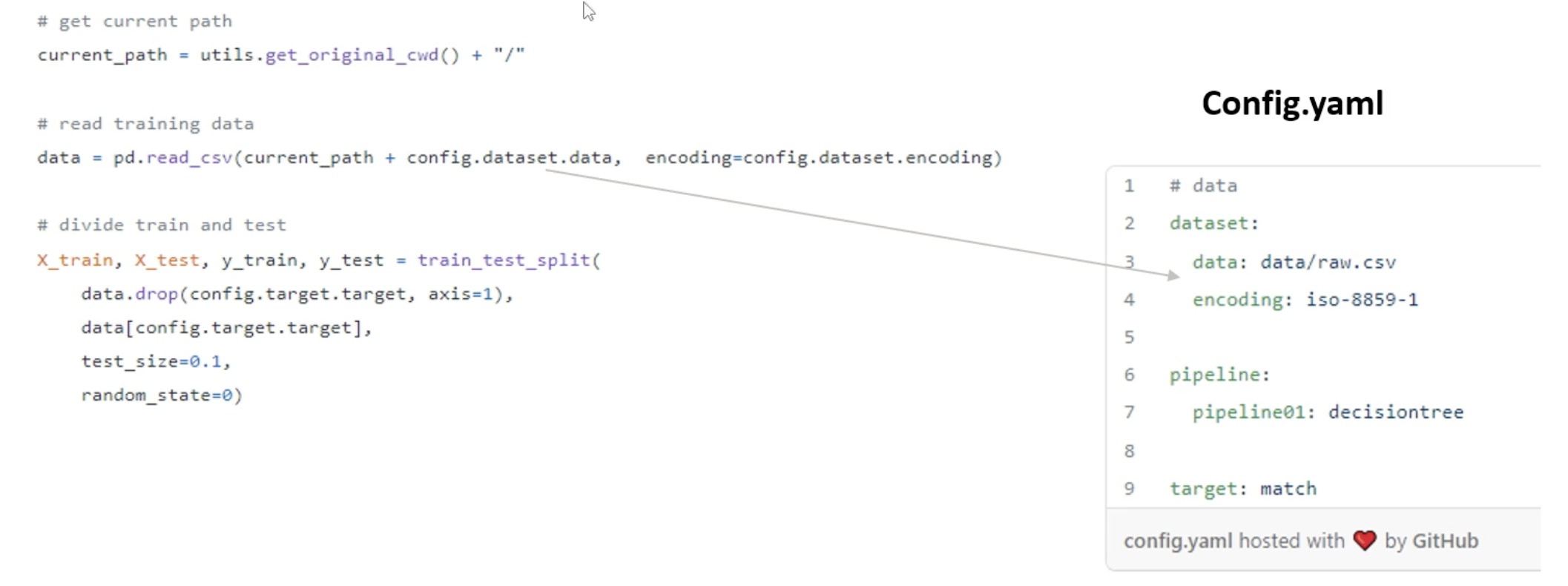

Hydra manages configuration files, making project management easier. In data science, it is common to run many different configurations and models, so configuration values should not be hard-coded. With Hydra, we keep them in configuration files instead. For example, suppose we need to modify the input variables of a model because the input dataset has changed. It would take a long time to find every place in the code where the input variables are specified and modify them. Likewise, if we want to run several experiments, changing the values manually each time is slow. For example, let's assume we have the following code:

columns = ['iid', 'id', 'idg', 'wave', 'career']

df.drop(columns, axis = 1, inplace = True)

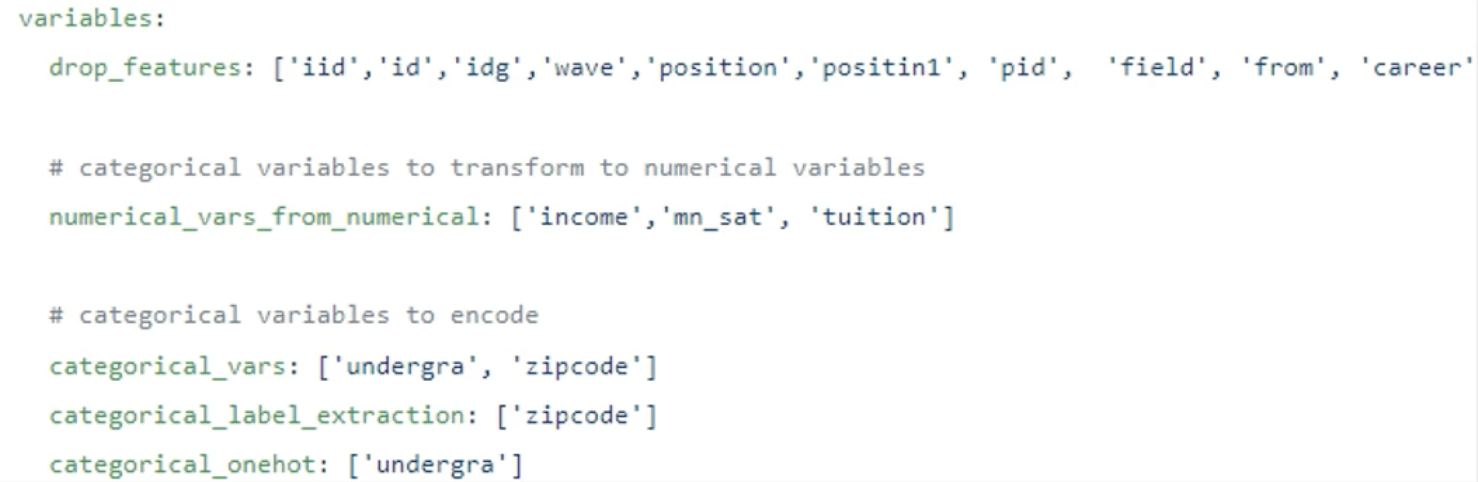

Here, we want to drop a list of columns. Although it is fine to specify the list here, it would be better to set the columns in a config file. Here is a config file that holds this information:

As we can see, it is much easier to modify or remove the list of variables from a configuration (config) file. If the variables change, we can modify them directly in this isolated file.

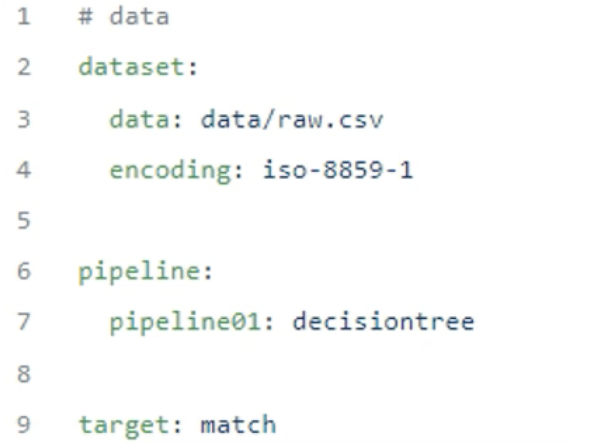

Configuration file

A configuration file contains parameters that define the configuration of the program. It is good practice to avoid hard-coding values in Python scripts. YAML is a common language for configuration files.

To manage these configuration files, we use Hydra, although other tools such as PyYAML can also be used for this purpose. Hydra has several benefits:

- We can change parameters from the terminal.

- It allows us to switch between different configuration groups easily.

- It automatically records the results of each run along with the configuration used.
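Changing parameters from the terminal means passing key=value overrides, for example `python main.py model.name=xgboost` (where `main.py` is a hypothetical script name). The sketch below imitates that idea with the standard library only. It is not Hydra's implementation, its value parsing is far simpler than Hydra's override grammar, and `DEFAULT_CONFIG` and `apply_overrides` are names invented for this illustration.

```python
import copy
import json

# Hypothetical default configuration, standing in for a parsed YAML file.
DEFAULT_CONFIG = {
    "process": {"drop_columns": ["iid", "id", "idg", "wave", "career"]},
    "model": {"name": "logistic_regression", "test_size": 0.2},
}


def apply_overrides(config: dict, overrides: list[str]) -> dict:
    """Return a copy of config with 'section.key=value' overrides applied."""
    result = copy.deepcopy(config)  # leave the defaults untouched
    for item in overrides:
        dotted_key, _, raw_value = item.partition("=")
        *parents, leaf = dotted_key.split(".")
        node = result
        for key in parents:
            node = node.setdefault(key, {})
        try:
            node[leaf] = json.loads(raw_value)  # numbers, booleans, JSON lists
        except json.JSONDecodeError:
            node[leaf] = raw_value  # unquoted text is kept as a string
    return result


if __name__ == "__main__":
    cfg = apply_overrides(DEFAULT_CONFIG, ["model.name=xgboost", "model.test_size=0.3"])
    print(cfg["model"])
```

Because the overrides are applied to a copy, the defaults stay as they are and every run can start from the same base configuration.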

Here is a configuration file that has different parts.

When we use Hydra, values are not placed within quotes, even when they are strings. Hydra still interprets them as strings.

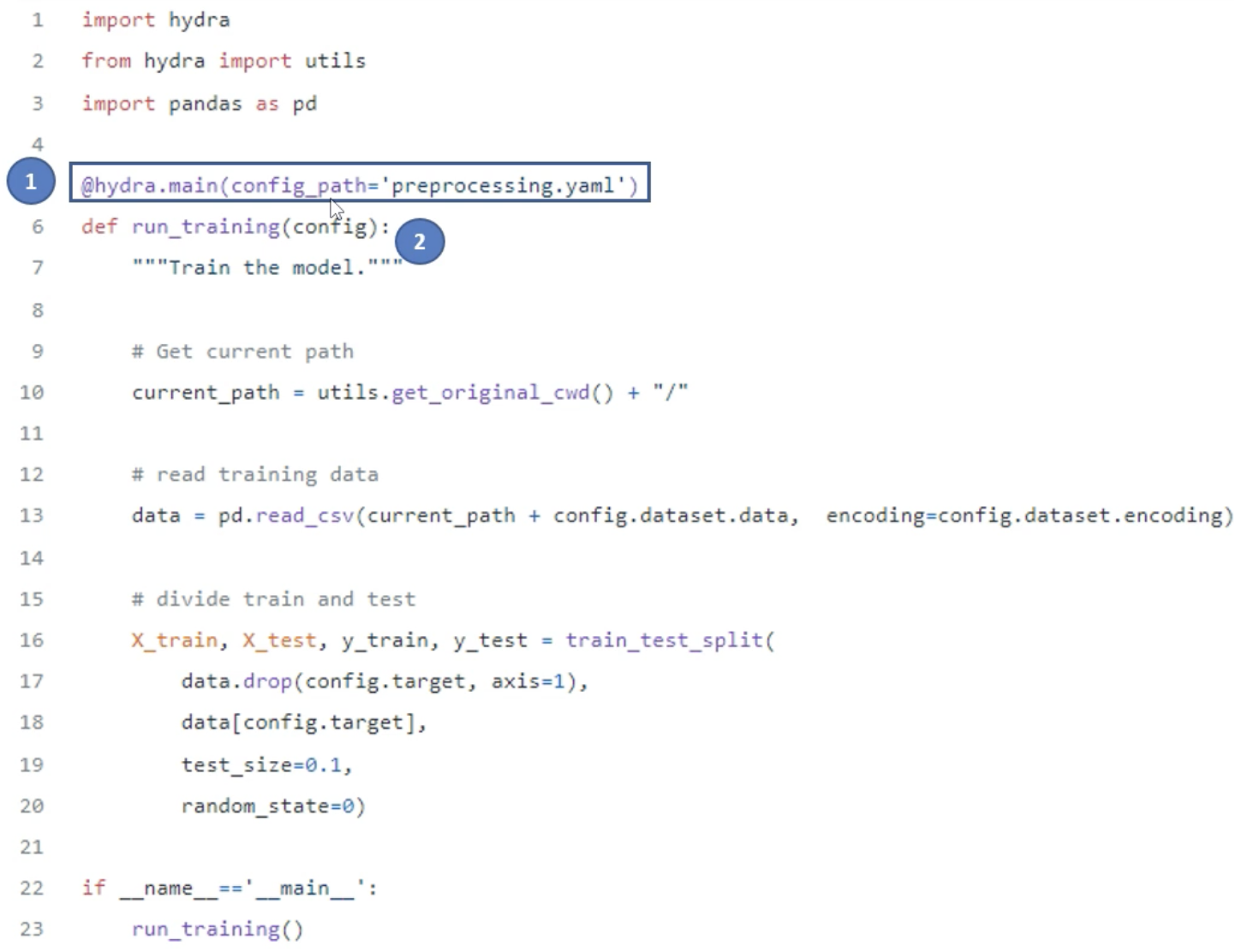

To use Hydra in our projects, we apply the hydra.main decorator to a function, passing the config_path argument (the exact arguments required depend on the Hydra version). To use the configuration inside that function, we must accept the config as an argument.

AutoML

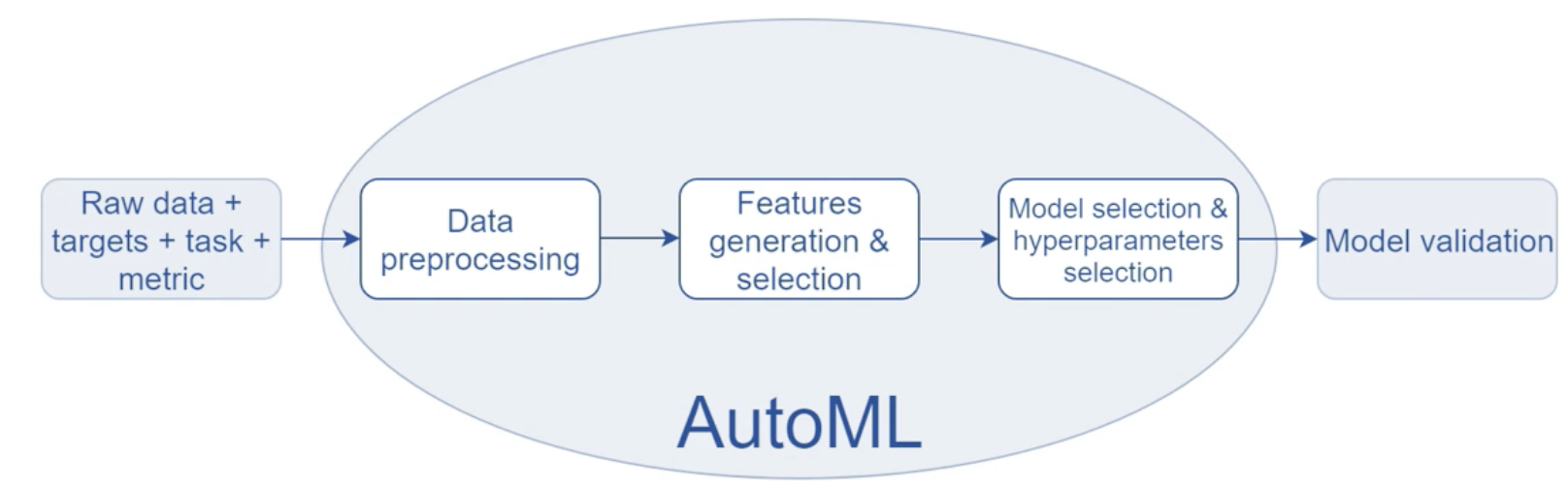

Automated machine learning, also referred to as automated ML or AutoML, is the process of automating the time-consuming, iterative tasks of machine learning model development. It helps in the following steps of developing machine learning models:

- Preprocessing of the data.

- Generating new variables and selecting the most significant ones.

- Training and selecting the best model.

- Adjusting the hyperparameters of the chosen model.

- Evaluating the model.

- Deploying the model.
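The "training and selecting the best model" step can be sketched as a loop that fits every candidate and keeps the one with the lowest validation error. The example below is a toy illustration with the standard library only: the two constant-prediction baselines, the helper names and the numbers are all made up, and a real AutoML library compares actual learning algorithms on many metrics.

```python
from statistics import mean, median


# Hypothetical candidate "models": each fit function returns a constant predictor.
def fit_mean(train_targets):
    value = mean(train_targets)
    return lambda: value


def fit_median(train_targets):
    value = median(train_targets)
    return lambda: value


CANDIDATES = {"mean_baseline": fit_mean, "median_baseline": fit_median}


def mean_absolute_error(predict, targets):
    """Average absolute difference between the prediction and each target."""
    return mean(abs(target - predict()) for target in targets)


def select_best_model(train_targets, valid_targets, candidates=CANDIDATES):
    """Fit every candidate and return (name, error) for the lowest validation error."""
    scores = {}
    for name, fit in candidates.items():
        predict = fit(train_targets)
        scores[name] = mean_absolute_error(predict, valid_targets)
    best = min(scores, key=scores.get)
    return best, scores[best]


if __name__ == "__main__":
    train = [1, 2, 3, 4, 100]  # made-up targets with one outlier
    valid = [2, 3, 4]
    print(select_best_model(train, valid))
```

With the outlier in the training data, the median baseline generalizes better than the mean, so the loop picks it without any manual comparison.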

PyCaret

PyCaret is an open-source, low-code machine learning library. It provides AutoML functionality: by calling a few functions, we can develop a machine learning model from start to finish. This considerably reduces the time and effort required from the data scientist.

DVC

DVC stands for Data Version Control. It is used for version control of model training data.

pdoc

pdoc is used to automatically create documentation for our project.